As a musician, engineer, and web developer, I have found it very exciting to watch the Web Audio and WebRTC APIs mature. A robust platform for audio on the web has the potential to usher in a new era of collaborative music creation and social audio sharing. While native desktop DAW applications such as Pro Tools, Logic, Ableton Live, FL Studio, GarageBand, Digital Performer, and Cubase have grown more powerful and flexible over the last several years, facilities for collaboration and social sharing haven’t really kept pace.[1] Perhaps the DAW of the future will be a web application, something like the audio equivalent of Google Docs.

I can dream. Or I can take things apart, see how they work, and then build new things.

recorder.js

In this post, I’ll walk through a popular demo of recording with the Web Audio API. In the process, I’ll touch on the basics of the Web Audio API, Web Workers, and WebRTC, a trio of fledgling web technologies that combine to allow us to record and process audio in the browser.

The AudioRecorder demo is a simple recording interface comprising three parts: an animated spectrograph of the client’s current audio input, a record button that starts and stops recording audio (and displaying the recorded audio’s waveform) from that input, and a save button that allows the user to download the recorded audio as a WAV file. I’m just going to focus on the latter two here, those responsible for recording audio, displaying the audio’s waveform while recording, and finally saving the recording. Most of this functionality comes from Matt Diamond’s fantastic recorder.js plugin.

Part 1: main.js

You can follow along with main.js here.

Like most JavaScript that takes advantage of bleeding-edge browser features, this application’s JavaScript begins with a whole bunch of feature detection to make sure that the browser is capable of animating the audio display and obtaining an audio stream using WebRTC. At this time, WebRTC is partially implemented in some browsers and not supported at all in several others. It provides access to a video and/or audio stream from the user through navigator.getUserMedia(), which takes a constraints object as a parameter. Each constraint is either optional or mandatory. In this case, the recorder uses mandatory constraints to disable a handful of default audio behaviors in Chrome.
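As a rough sketch of that detection step, the snippet below picks whichever getUserMedia() variant a browser exposes and builds a constraints object in the mandatory/optional style. The helper names findGetUserMedia() and buildAudioConstraints() are hypothetical, and the goog-prefixed keys are my assumption about the Chrome-specific behaviors being switched off; check main.js for the exact list.

```javascript
// Hypothetical helper: return the first getUserMedia variant found on a
// navigator-like object, or null if the browser has none.
function findGetUserMedia(nav) {
  const fn = nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
  return typeof fn === 'function' ? fn.bind(nav) : null;
}

// Hypothetical helper: audio-only constraints in the mandatory/optional style.
// The goog* keys are assumed names for Chrome's default audio processing.
function buildAudioConstraints() {
  return {
    audio: {
      mandatory: {
        googEchoCancellation: 'false',
        googAutoGainControl: 'false',
        googNoiseSuppression: 'false',
        googHighpassFilter: 'false'
      },
      optional: []
    }
  };
}

// In a browser this would be used roughly like so:
//   const getUserMedia = findGetUserMedia(navigator);
//   if (getUserMedia) getUserMedia(buildAudioConstraints(), gotStream, onError);
```

Passing the navigator in as an argument, rather than reading the global, is only there to make the detection easy to try outside a browser.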

Now we get into the Web Audio API, which allows us to work with audio in the browser. It is quite well-supported in modern browsers. Much as the HTML <canvas> element allows us to get a 2D or 3D drawing context in JavaScript, the AudioContext() constructor gives us a context in which we can connect sound sources and destinations (which the Web Audio API collectively calls AudioNodes). Everything that the Web Audio API does happens within AudioContext objects, which are directed graphs of AudioNodes that are responsible for receiving, producing, transforming, and emitting audio signals.[2] In this way, it works similarly to my beloved Minimoog compact analog synthesizer from the 1970s, with its oscillators, mixer, and filters, or to a recording studio with its many microphones, processors (EQ, compression, effects, and so on), mixing console, and recording devices (tape machines and computers).

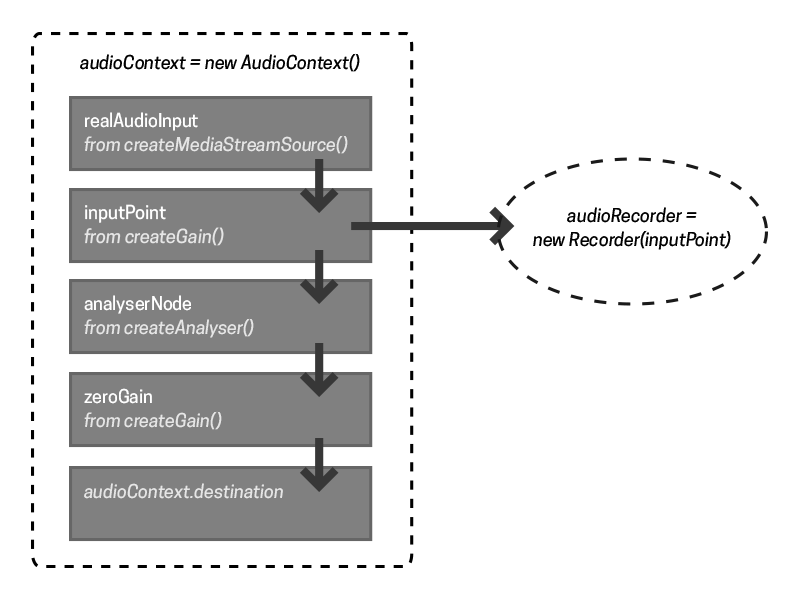

In this case, the callback passed to getUserMedia(), gotStream(), is called when the stream is successfully obtained and goes about creating a bunch of AudioNodes on the context and connecting them. There’s an inputPoint node for controlling gain, a realAudioInput for actually getting the audio, an analyserNode for performing a Fourier transform on the audio (this is for displaying the spectrograph or “analyser”), and finally a zeroGain node that is the endpoint of the audio in the context. The recorder just records audio and doesn’t actually output any audio directly, so the zeroGain just receives the audio at the end of the chain and silences it. Right before that connection is made, a new Recorder object is created with the stream from getUserMedia() as its source.
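The wiring described above might look roughly like the sketch below. The node names come from the demo, but the buildGraph() wrapper and the fftSize value are my own assumptions, and the Recorder creation is left out.

```javascript
// Sketch of the graph wiring. `audioContext` is expected to be an
// AudioContext and `stream` a MediaStream obtained from getUserMedia().
function buildGraph(audioContext, stream) {
  const inputPoint = audioContext.createGain();

  // Turn the microphone stream into an AudioNode and feed it to inputPoint.
  const realAudioInput = audioContext.createMediaStreamSource(stream);
  realAudioInput.connect(inputPoint);

  // The analyser performs the Fourier transform used by the display.
  const analyserNode = audioContext.createAnalyser();
  analyserNode.fftSize = 2048; // assumed value; must be a power of two
  inputPoint.connect(analyserNode);

  // A gain of zero silences the signal before it reaches the speakers.
  const zeroGain = audioContext.createGain();
  zeroGain.gain.value = 0.0;
  inputPoint.connect(zeroGain);
  zeroGain.connect(audioContext.destination);

  return { inputPoint, realAudioInput, analyserNode, zeroGain };
}
```

Every connect() call adds one edge to the directed graph, so reading the function top to bottom gives you the signal path from microphone to (muted) output.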

Okay, so that covers the initialization of the application. The rest of the application, save the analyser that I won’t cover in detail here, is event driven. The toggleRecord() function is triggered by the click event on the record button and either starts or stops the recording.

We’ll start with the recorder activation. clear() is called on the instance of Recorder created in gotStream(), followed by record(). The methods are implemented in Matt Diamond’s recorder.js.
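Here is a minimal sketch of that toggle logic. The makeToggleRecord() factory is hypothetical, it tracks state in a closure variable rather than however main.js does it, and I’m assuming the Recorder instance also exposes a stop() method alongside clear() and record().

```javascript
// Hypothetical factory: wraps a Recorder-like object (anything with clear(),
// record() and stop()) and returns a click handler that flips its state.
function makeToggleRecord(recorder) {
  let recording = false;

  return function toggleRecord() {
    if (recording) {
      recorder.stop();    // assumed method: stop capturing audio
    } else {
      recorder.clear();   // throw away any previous take
      recorder.record();  // start capturing audio
    }
    recording = !recording;
    return recording;
  };
}

// In a browser this could be attached with something like:
//   recordButton.addEventListener('click', makeToggleRecord(audioRecorder));
```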

Part 2: recorder.js

The Web Audio API provides many pre-built AudioNode objects that cover most use cases; these are generally implemented in a lower-level language than JavaScript (usually C or C++). However, it’s also possible to create your own processing object directly in JavaScript using the createScriptProcessor() method of AudioContext, which is how Mr. Diamond deals with recording audio in recorder.js.
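To make that concrete, here is a small sketch of tapping an audio source with a script processor. The tapAudio() function and its onChunk callback are hypothetical names of mine, and the default buffer length of 4096 is an assumption rather than a value taken from recorder.js.

```javascript
// Sketch: tap the audio flowing out of `source` with a script processor.
// `onChunk` is a hypothetical callback receiving one Float32Array per channel.
function tapAudio(audioContext, source, onChunk, bufferLen = 4096) {
  // Arguments: buffer size in sample frames, input channels, output channels.
  const node = audioContext.createScriptProcessor(bufferLen, 2, 2);

  node.onaudioprocess = function (event) {
    const left = event.inputBuffer.getChannelData(0);
    const right = event.inputBuffer.getChannelData(1);
    // Copy the samples, since the underlying buffers may be reused.
    onChunk(new Float32Array(left), new Float32Array(right));
  };

  source.connect(node);
  // Connecting the processor onward keeps audio events flowing in some browsers.
  node.connect(audioContext.destination);
  return node;
}
```

The onaudioprocess handler fires once per filled buffer, so a larger bufferLen means fewer, bigger chunks and a little more latency.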

In addition, Mr. Diamond’s recorder.js makes use of the Web Worker API, another relatively new browser API that gives developers the ability to run background processes. The API, which is quite well-supported in modern browsers, exposes a constructor function, Worker(), that takes the path of the JavaScript file that will run on its own thread in the background. The worker in recorder.js is assigned to the recorderWorker.js file, which we’ll get to in a moment.

The Recorder() constructor begins by creating a script processor and setting a buffer length for recording audio. Communication between a worker and the script that spawned it is achieved through message passing, so this function then passes the ‘init’ message to the worker along with a config object that specifies the sample rate.
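The message passing on the main-thread side can be sketched like this. The createRecorderClient() wrapper is hypothetical, and the exact shape of the messages (command, config, and buffer fields) is my assumption based on the description here, not a copy of recorder.js.

```javascript
// Sketch of the main-thread side. `worker` is anything with postMessage(),
// such as `new Worker('recorderWorker.js')` in a browser.
function createRecorderClient(worker, sampleRate) {
  // Tell the worker how the audio is sampled before any data arrives.
  worker.postMessage({ command: 'init', config: { sampleRate: sampleRate } });

  return {
    // Forward one chunk of left/right samples to the worker.
    record(left, right) {
      worker.postMessage({ command: 'record', buffer: [left, right] });
    },
    // Ask the worker to discard everything recorded so far.
    clear() {
      worker.postMessage({ command: 'clear' });
    }
  };
}
```

Because the worker only ever sees plain messages, the main thread stays free to keep the analyser animation running while samples pile up in the background.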

The worker script contains all of the guts of the recorder. The entire process of passing audio from the left and right channel input buffers to the recording buffers and creating a WAV file from those buffers is encapsulated in this worker. The switch statement in the ‘onmessage’ handler at the top of the script, which receives the ‘command’ and ‘buffer’ parameters sent from recorder.js, controls the worker’s behavior: the ‘record’, ‘exportWAV’, ‘getBuffers’, and ‘clear’ messages kick off those actions in the worker.
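Until I get to the real thing, here is my own simplified sketch of what such a worker has to do: dispatch on the command, accumulate chunks, interleave the two channels, and write a 16-bit stereo PCM WAV. It is not the code from recorderWorker.js; the function names are assumptions, and handleMessage() returns its results directly where a real worker would post them back to the main thread.

```javascript
// Recording state kept by the (hypothetical) worker.
let sampleRate = 44100;
let recLength = 0;
let recBuffersL = [];
let recBuffersR = [];

// Dispatch on the command name, as the switch statement described above does.
function handleMessage(data) {
  switch (data.command) {
    case 'init':
      sampleRate = data.config.sampleRate;
      return null;
    case 'record':
      recBuffersL.push(data.buffer[0]);
      recBuffersR.push(data.buffer[1]);
      recLength += data.buffer[0].length;
      return null;
    case 'getBuffers':
      return [mergeBuffers(recBuffersL, recLength), mergeBuffers(recBuffersR, recLength)];
    case 'exportWAV':
      return encodeWAV(interleave(
        mergeBuffers(recBuffersL, recLength),
        mergeBuffers(recBuffersR, recLength)), sampleRate);
    case 'clear':
      recLength = 0;
      recBuffersL = [];
      recBuffersR = [];
      return null;
    default:
      return null;
  }
}

// Concatenate a list of Float32Array chunks into one array.
function mergeBuffers(chunks, length) {
  const result = new Float32Array(length);
  let offset = 0;
  for (const chunk of chunks) {
    result.set(chunk, offset);
    offset += chunk.length;
  }
  return result;
}

// Alternate left and right samples: L0 R0 L1 R1 ...
function interleave(left, right) {
  const result = new Float32Array(left.length + right.length);
  for (let i = 0; i < left.length; i++) {
    result[2 * i] = left[i];
    result[2 * i + 1] = right[i];
  }
  return result;
}

function writeString(view, offset, text) {
  for (let i = 0; i < text.length; i++) {
    view.setUint8(offset + i, text.charCodeAt(i));
  }
}

// Build a 16-bit stereo PCM WAV: a 44-byte header followed by the samples.
function encodeWAV(samples, rate) {
  const view = new DataView(new ArrayBuffer(44 + samples.length * 2));
  writeString(view, 0, 'RIFF');
  view.setUint32(4, 36 + samples.length * 2, true); // file size minus 8
  writeString(view, 8, 'WAVE');
  writeString(view, 12, 'fmt ');
  view.setUint32(16, 16, true);       // fmt chunk size
  view.setUint16(20, 1, true);        // format 1 = PCM
  view.setUint16(22, 2, true);        // two channels
  view.setUint32(24, rate, true);     // sample rate
  view.setUint32(28, rate * 4, true); // byte rate = rate * channels * 2
  view.setUint16(32, 4, true);        // block align = channels * 2
  view.setUint16(34, 16, true);       // bits per sample
  writeString(view, 36, 'data');
  view.setUint32(40, samples.length * 2, true);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i])); // clamp to [-1, 1]
    view.setInt16(44 + i * 2, s < 0 ? s * 0x8000 : s * 0x7FFF, true);
  }
  return view;
}
```

In a browser, the DataView returned for ‘exportWAV’ could be wrapped in a Blob with the audio/wav type to produce the downloadable file.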

Intermission

I’ll be updating this post with more details on this worker and the recorder.js flow at a later date. In the meantime, make some noise at your laptop.

More Resources

Web Audio API at MDN: https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API

Tutorial at HTML5 Rocks: http://www.html5rocks.com/en/tutorials/webaudio/intro/

[1] SoundCloud and Bandcamp are fantastic platforms, but because neither of them provides any creation tools (unlike platforms like Instagram and Vine), they’re largely out of reach for non-professional users.

[2] There’s more about the audio graph in Boris Smus’s excellent book on the Web Audio API.